Embedded Learning and Optimization for Interaction-aware Model Predictive Control

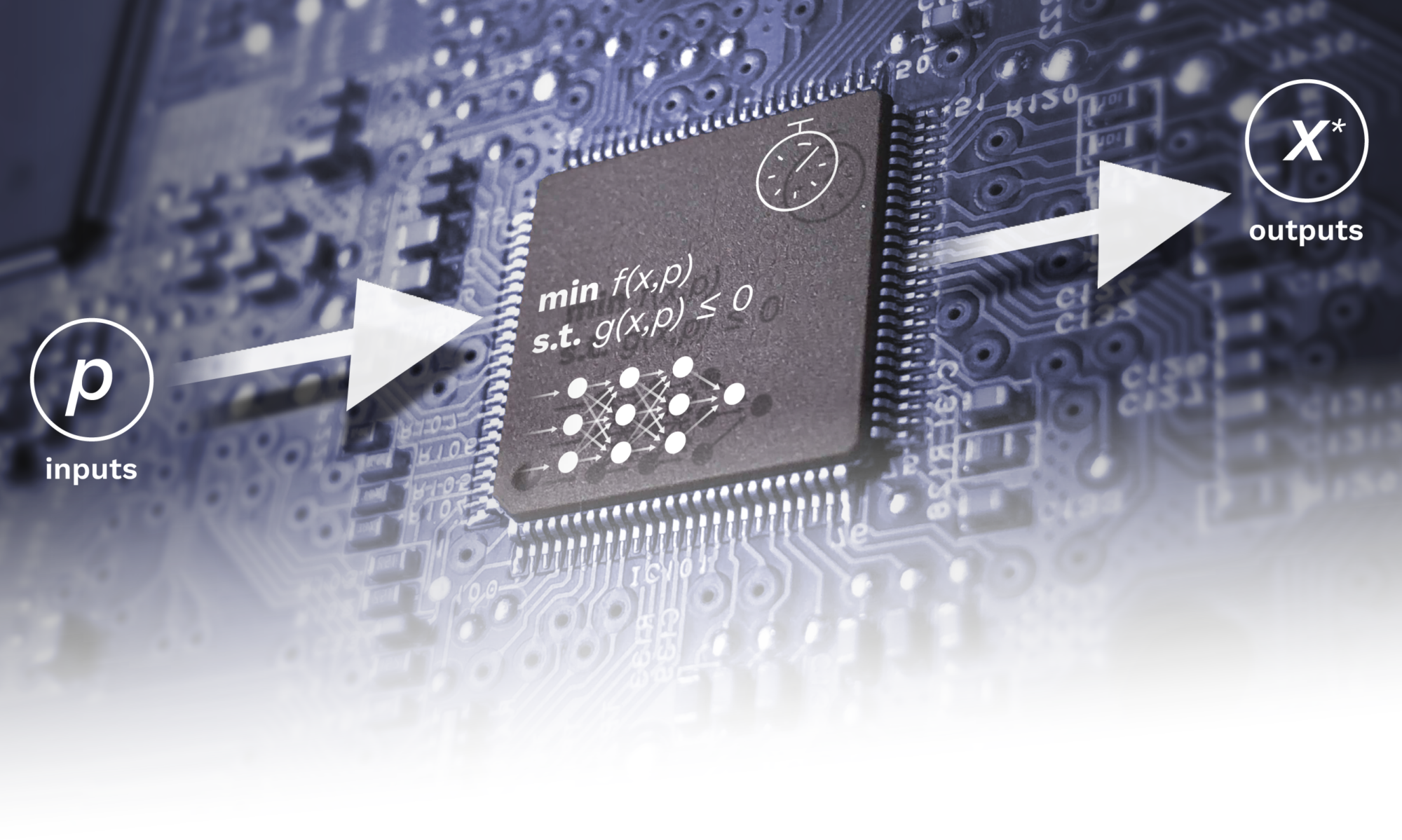

The goal of this project is to develop embedded optimization and online learning algorithms for interaction-aware Model Predictive Control (MPC) for autonomous navigation in uncertain environments. So far, we have developed an interaction-aware MPC formulation integrated with online learning. Integrating online learning into the MPC formulation lets the controller tailor its prediction model to the specific dynamics of the system it interacts with. This framework serves as the foundation for the further development of embedded learning and optimization methods.

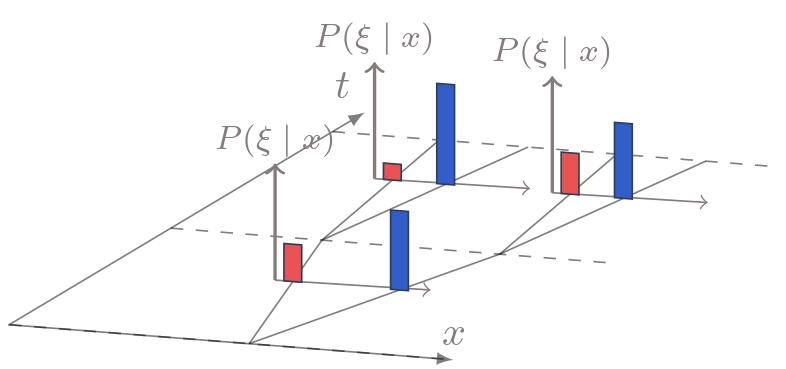

A key contribution is our learning algorithm, which identifies the parameters of switching system models with state-dependent transition probabilities. Such models have been shown to capture nonlinear dynamics effectively. However, because the mode distribution depends on the state, the resulting stochastic control problem is nonconvex.
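To make the model class concrete, the following minimal sketch simulates a scalar switching system whose active mode is drawn from a softmax over the current state. The two modes, the softmax parameterization, and all numeric values are illustrative assumptions, not the model used in the project.

```python
import math
import random


def mode_probabilities(x, weights, biases):
    """Softmax over modes; each mode's score is affine in the state x."""
    scores = [w * x + b for w, b in zip(weights, biases)]
    top = max(scores)  # subtract the maximum for numerical stability
    exps = [math.exp(s - top) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def simulate(x0, steps, rng):
    """Roll out x_{k+1} = a[mode] * x_k + c[mode] + noise for a sampled mode."""
    # Hypothetical two-mode system: mode 0 contracts, mode 1 drifts upward.
    a = [0.5, 1.0]
    c = [0.0, 0.3]
    weights = [1.0, -1.0]  # mode 0 becomes more likely as x grows
    biases = [0.0, 0.0]
    x, trajectory = x0, [x0]
    for _ in range(steps):
        p = mode_probabilities(x, weights, biases)
        mode = 0 if rng.random() < p[0] else 1
        x = a[mode] * x + c[mode] + rng.gauss(0.0, 0.01)
        trajectory.append(x)
    return trajectory


trajectory = simulate(1.0, 20, random.Random(0))
```

Because the mode probabilities depend on `x`, any control input that moves the state also changes the distribution of future modes, which is the source of the nonconvexity mentioned above.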

To address this challenge, we propose a risk-sensitive MPC framework specifically designed for such stochastic systems. This risk-sensitive approach offers two significant advantages:

- It enables more natural human-robot interactions

- It introduces additional problem structure that allows us to design customized algorithms for solving the nonconvex optimal control problems efficiently

Interaction-aware Model Predictive Control for Autonomous Driving

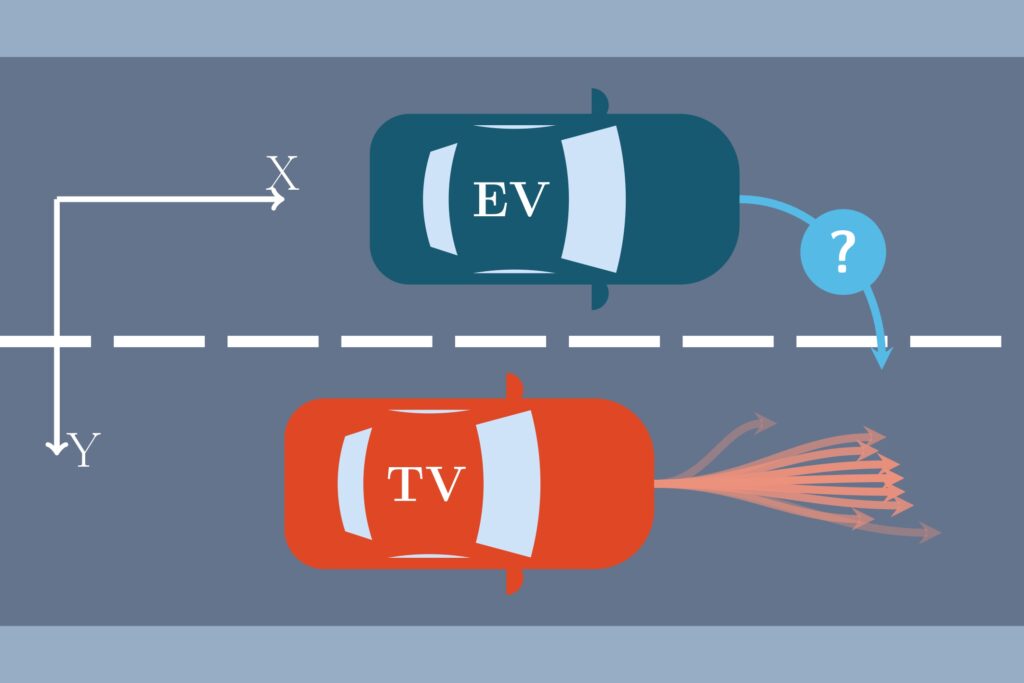

We propose an interaction-aware stochastic model predictive control (MPC) strategy for lane merging tasks in automated driving. The MPC strategy is integrated with an online learning framework, which models a given driver’s cooperation level as an unknown parameter in a state-dependent probability distribution. The online learning framework adaptively estimates the surrounding vehicle’s cooperation level from that vehicle’s past state trajectory and combines this estimate with a kinematic vehicle model to predict the distribution of a multimodal future state trajectory. Learning is conducted using logistic regression, enabling fast online computations. The multimodal prediction is used in the MPC algorithm to compute the optimal control input while satisfying safety constraints. We demonstrate our algorithm in an interactive lane changing scenario with drivers whose cooperation levels are selected at random.
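As a rough illustration of how a cooperation level could be estimated online with logistic regression, the sketch below treats it as a single scalar parameter `theta` and updates it with one gradient step per observation. The scalar feature, the binary "yielded" label, and the step size are hypothetical simplifications, not the formulation used in the paper.

```python
import math


def sigmoid(z):
    """Numerically stable logistic function."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


def update_cooperation(theta, feature, yielded, step=0.5):
    """One gradient ascent step on the logistic log-likelihood.

    theta   : current estimate of the cooperation parameter (assumed scalar)
    feature : state-dependent feature, e.g. a normalized gap (hypothetical)
    yielded : 1 if the other driver yielded at this step, else 0
    """
    predicted = sigmoid(theta * feature)
    return theta + step * (yielded - predicted) * feature


# Feed a short, made-up history of observations to the estimator.
theta = 0.0
for feature, yielded in [(1.0, 1), (0.8, 1), (1.2, 0), (1.0, 1)]:
    theta = update_cooperation(theta, feature, yielded)
```

Each update costs a handful of arithmetic operations, which is consistent with the fast online computation the approach relies on.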

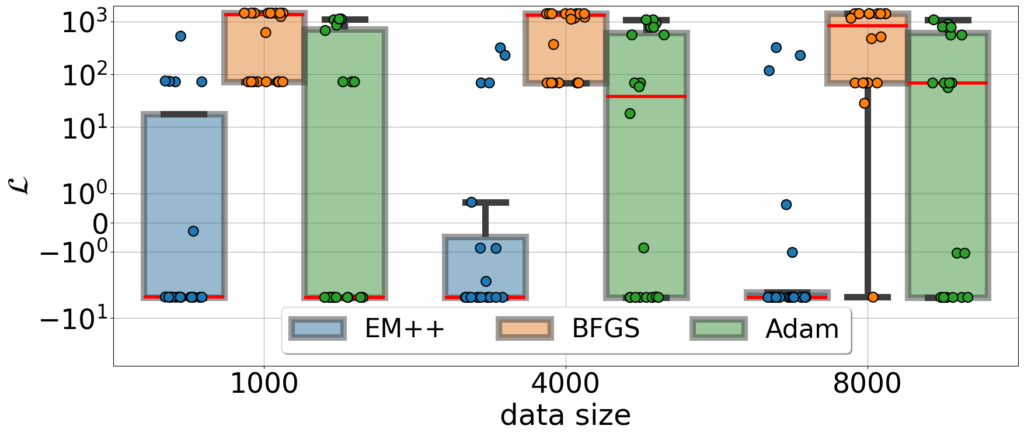

EM++: A parameter learning framework for stochastic switching systems

Renzi Wang, Alexander Bodard, Mathijs Schuurmans, and Panagiotis Patrinos. “EM++: A parameter learning framework for stochastic switching systems”. 2024, submitted for publication.

A realistic and computationally efficient model can significantly enhance the performance of a model predictive controller. This is especially true for complex scenarios where the system being controlled must interact with other systems. This work proposes a general switching dynamical system model and a custom majorization-minimization-based algorithm, EM++, for identifying its parameters. For certain families of distributions, such as Gaussian distributions, this algorithm reduces to the well-known expectation-maximization method. We prove global convergence of the algorithm under suitable assumptions, thus addressing an important open issue in the switching system identification literature. The effectiveness of both the proposed model and algorithm is validated through extensive numerical experiments.
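EM++ itself is not reproduced here. As a point of reference for the expectation-maximization method it reduces to in the Gaussian case, the sketch below runs textbook EM on a one-dimensional, two-component Gaussian mixture, which is a far simpler model than a switching system. The initial values and the variance floor are illustrative assumptions.

```python
import math


def gauss_pdf(x, mean, var):
    return math.exp(-0.5 * (x - mean) ** 2 / var) / math.sqrt(2.0 * math.pi * var)


def log_likelihood(data, weights, means, variances):
    return sum(
        math.log(sum(w * gauss_pdf(x, m, v)
                     for w, m, v in zip(weights, means, variances)))
        for x in data
    )


def em_step(data, weights, means, variances):
    k = len(weights)
    # E-step: posterior probability of each component for each sample.
    resp = []
    for x in data:
        joint = [weights[j] * gauss_pdf(x, means[j], variances[j]) for j in range(k)]
        total = sum(joint)
        resp.append([v / total for v in joint])
    # M-step: closed-form weighted maximum-likelihood updates.
    new_w, new_m, new_v = [], [], []
    for j in range(k):
        nj = sum(r[j] for r in resp)
        mean = sum(r[j] * x for r, x in zip(resp, data)) / nj
        var = sum(r[j] * (x - mean) ** 2 for r, x in zip(resp, data)) / nj
        new_w.append(nj / len(data))
        new_m.append(mean)
        new_v.append(max(var, 1e-6))  # floor guards against a collapsing variance
    return new_w, new_m, new_v


def fit(data, iterations=20):
    # Hypothetical initial guess for a two-component mixture.
    weights, means, variances = [0.5, 0.5], [-1.0, 1.0], [1.0, 1.0]
    history = []
    for _ in range(iterations):
        weights, means, variances = em_step(data, weights, means, variances)
        history.append(log_likelihood(data, weights, means, variances))
    return weights, means, variances, history
```

The log-likelihood values collected in `history` should not decrease from one iteration to the next, which is the monotonicity property that majorization-minimization schemes share with EM.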

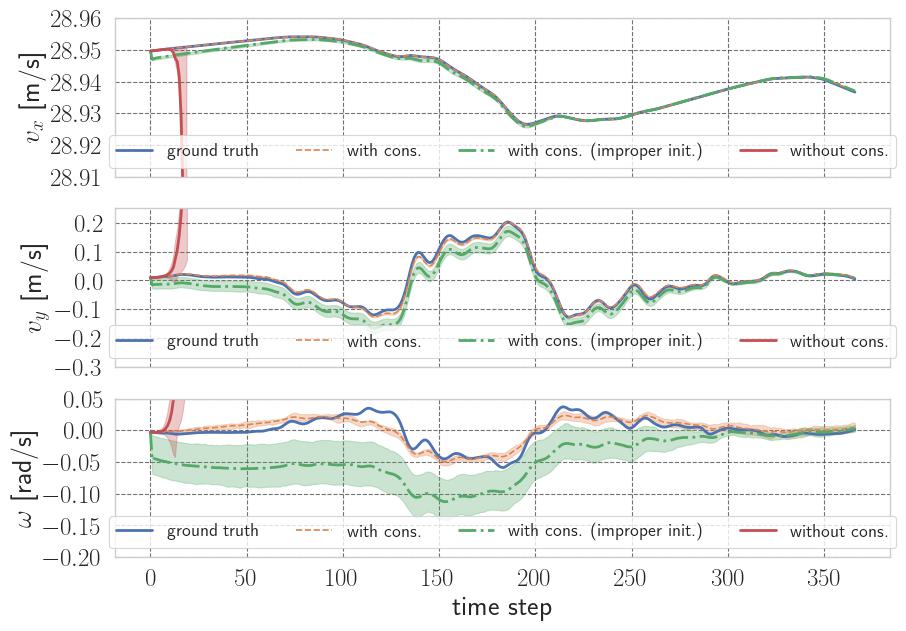

Imitation Learning from Observations: An Autoregressive Mixture of Experts Approach

Renzi Wang, Flavia Sofia Acerbo, Tong Duy Son, and Panagiotis Patrinos. “Imitation Learning from Observations: An Autoregressive Mixture of Experts Approach”. 2025 European Control Conference (ECC). IEEE, 2025.

This paper presents a novel approach to imitation learning from observations, in which an autoregressive mixture of experts model is used to fit the underlying policy. The parameters of the model are learned via a two-stage framework. By leveraging existing knowledge of the dynamics, the first stage estimates the control input sequences and hence reduces the problem complexity. In the second stage, the policy is learned by solving a regularized maximum-likelihood estimation problem using the estimated control input sequences. We further extend the learning procedure by incorporating a Lyapunov stability constraint to ensure asymptotic stability of the identified model and thus accurate multi-step predictions. The effectiveness of the proposed framework is validated using two autonomous driving datasets collected from human demonstrations, demonstrating its practical applicability in modelling complex nonlinear dynamics.
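The idea behind the first stage can be illustrated on a toy case: when the dynamics are known, unobserved control inputs can be recovered from consecutive observed states. The sketch below assumes a noise-free scalar linear model `x_next = a * x + b * u`, which is a deliberate simplification and not the vehicle model or estimator used in the paper.

```python
def estimate_inputs(states, a, b):
    """Recover u_k from x_{k+1} = a * x_k + b * u_k for a known scalar model."""
    return [(x_next - a * x) / b for x, x_next in zip(states, states[1:])]


def rollout(x0, inputs, a, b):
    """Generate the state sequence produced by a given input sequence."""
    states = [x0]
    for u in inputs:
        states.append(a * states[-1] + b * u)
    return states


# Hypothetical demonstration: only the states are observed.
observed = rollout(0.0, [1.0, -2.0, 0.5], a=0.9, b=0.5)
recovered = estimate_inputs(observed, a=0.9, b=0.5)
```

With the inputs estimated, the second stage reduces to fitting a policy to state-input pairs, which is a standard supervised learning problem.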

Risk-Sensitive Model Predictive Control for Interaction-Aware Planning -- A Sequential Convexification Algorithm

Renzi Wang, Mathijs Schuurmans, and Panagiotis Patrinos. “Risk-Sensitive Model Predictive Control for Interaction-Aware Planning -- A Sequential Convexification Algorithm”. 2025, submitted for publication.

This paper considers risk-sensitive model predictive control for stochastic systems with a decision-dependent distribution. This class of systems is commonly found in human-robot interaction scenarios. We derive computationally tractable convex upper bounds on both the objective function and frequently used penalty terms for collision avoidance, allowing us to efficiently solve the generally nonconvex optimal control problem as a sequence of convex problems. Simulations of a robot navigating a corridor demonstrate the effectiveness and the computational advantage of the proposed approach.
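The abstract does not state which risk measure is used. Assuming the entropic risk measure, the classical choice in risk-sensitive control, the sketch below evaluates it for a discrete cost distribution using a log-sum-exp formulation. The costs, probabilities, and risk parameter `gamma` are made-up values.

```python
import math


def entropic_risk(costs, probs, gamma):
    """(1/gamma) * log(sum_i p_i * exp(gamma * c_i)) for gamma > 0.

    The maximum cost is factored out so the exponentials cannot overflow.
    """
    top = max(costs)
    scaled = sum(p * math.exp(gamma * (c - top)) for c, p in zip(costs, probs))
    return top + math.log(scaled) / gamma


# Hypothetical two-outcome example: a cheap likely outcome and a costly rare one.
costs = [1.0, 5.0]
probs = [0.9, 0.1]
expected = sum(p * c for p, c in zip(probs, costs))
risk = entropic_risk(costs, probs, gamma=1.0)
```

For positive `gamma` the value lies between the expected cost and the worst-case cost, so the parameter trades off average performance against caution toward rare but costly outcomes.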