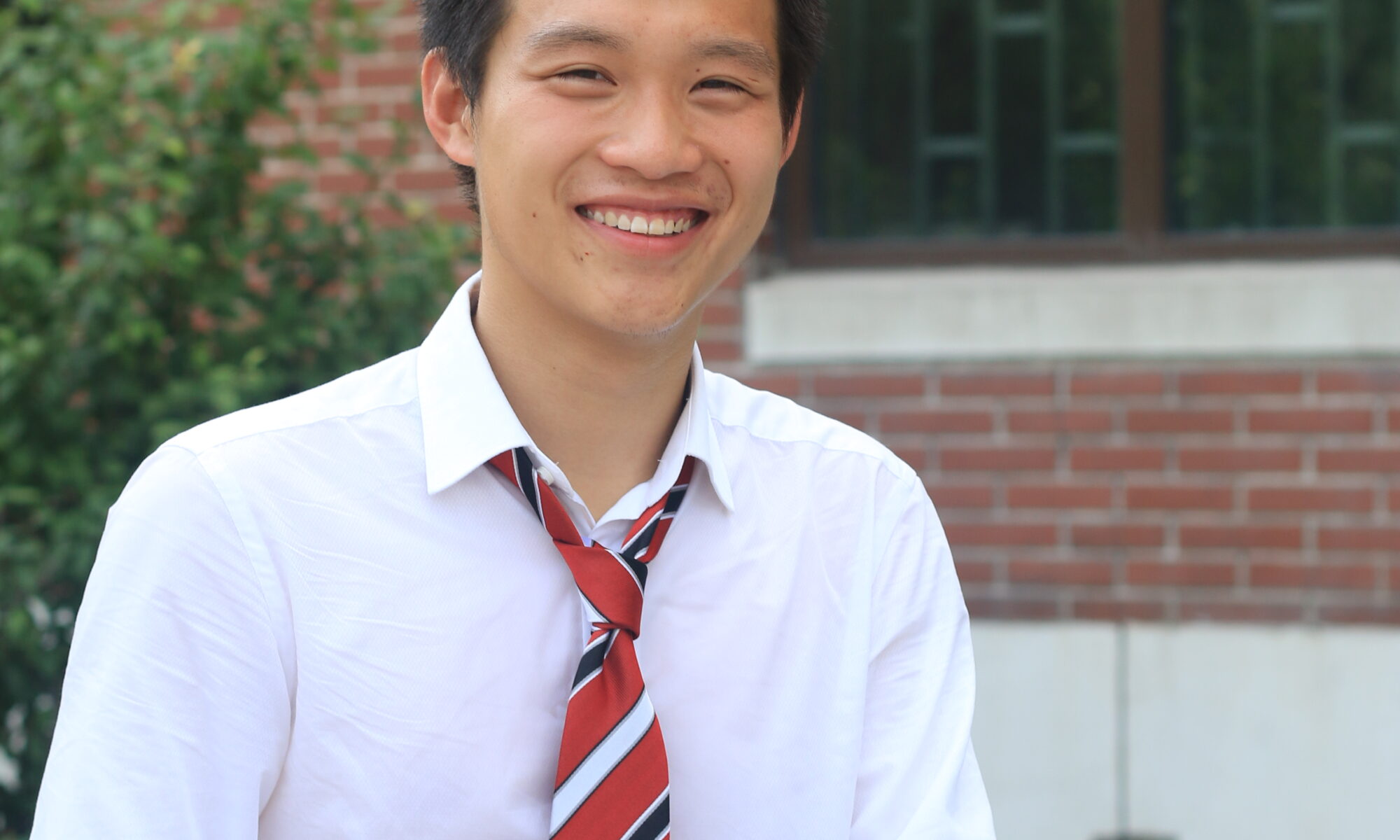

PhD Candidate in Computer Science

Neurobotics Lab, Department of Computer Science

University of Freiburg

Yuan Zhang received the B.Eng degree in Electronic Engineering from Tsinghua University in 2017, and the M.S.c degree in Machine Learning at University College London in 2018. After graduation, he come back to China and started to work on applying reinforcement learning in Natural Language Processing tasks including dialog policy learning and weak supervision learning in a startup called Laiye. His research interest lies in reinforcement learning, especially its applications in real-world scenarios (e.g. dialogue systems, games, robotics).

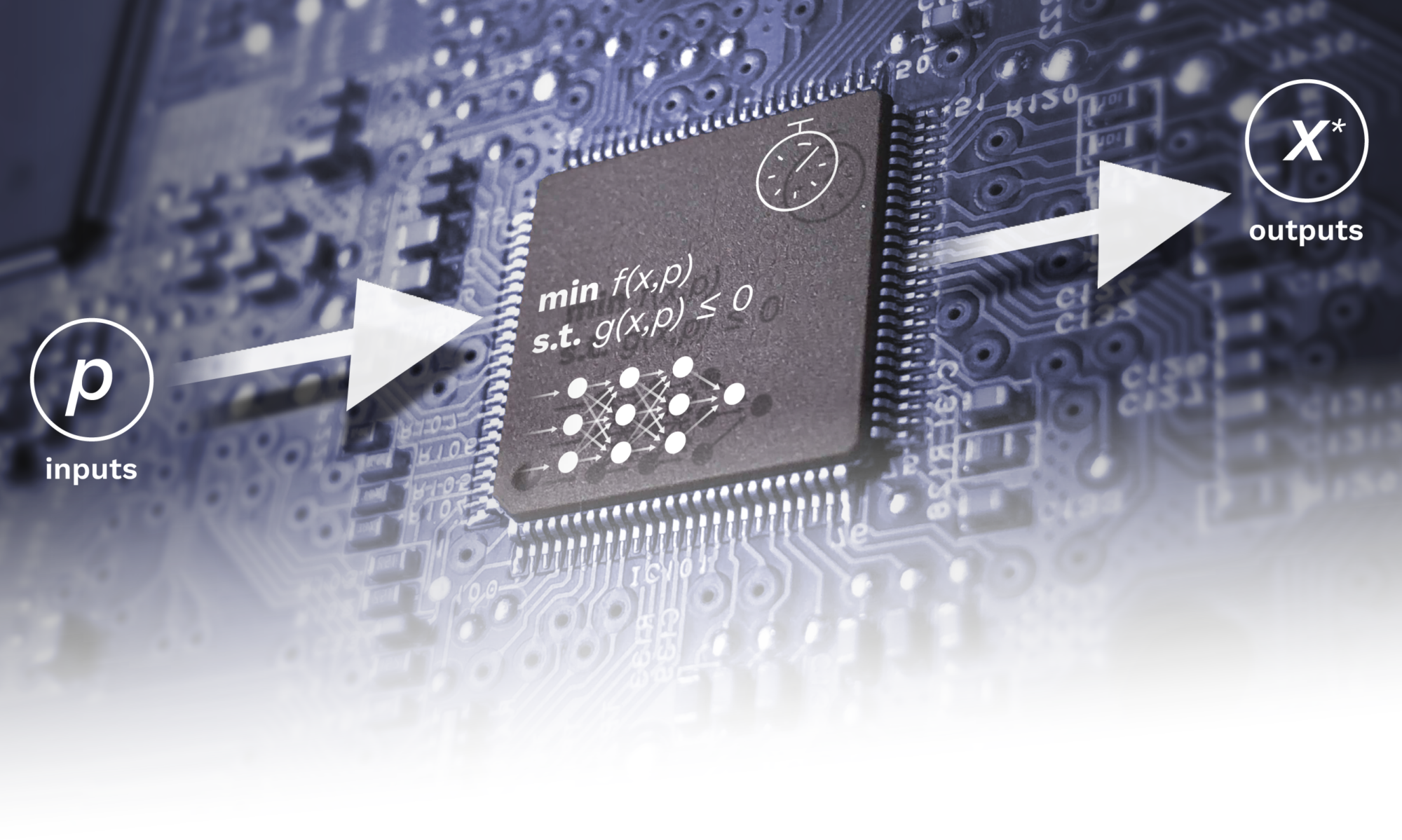

Project description

Deep Learning has brought significant progress in a variety of applications of machine learning in recent years. As powerful non-linear function approximators, their potential for use in learning-based control applications is very appealing. They benefit from large amounts of data, and present a very scalable solution e.g. for learning hard-to-model plant dynamics from data. Currently, the most widely-used method of training these deep networks are maximum likelihood approaches, which only give a point estimate of the parameters that maximize the likelihood of the input data, and do not quantify how certain the model is about its predictions. The uncertainty of the model is, however, a crucial factor in robust and risk-averse control applications. This is especially important when the learned dynamics model is to be used to predict over a longer horizon, resulting in compounding errors of inaccurate models. Bayesian Deep Learning approaches offer a promising alternative that allows to quantify model uncertainty explicitly, but many current approaches are difficult to scale, have high computational overhead, and poorly calibrated uncertainties. The objective for the ESR in this project will be to develop new Bayesian Deep Learning approaches, including recurrent architectures, that address these issues and are well suited for embedded control applications with their challenging constraints on computational complexity, memory, and real-time demands.

Publications

Zhang, Yuan; Hoffman, Jasper; Boedecker, Joschka

UDUC: An Uncertainty-driven Approach for Learning-based Robust Control Proceedings Article

In: ECAI 2024 - 27th European Conference on Artificial Intelligence - Including 13th Conference on Prestigious Applications of Intelligent Systems (PAIS 2024), pp. 4402-4409, IOS Press, Santiago de Compostela, Spain, 2024.

@inproceedings{zhang2024uduc,

title = {UDUC: An Uncertainty-driven Approach for Learning-based Robust Control},

author = {Yuan Zhang and Jasper Hoffman and Joschka Boedecker},

url = {https://arxiv.org/abs/2405.02598},

doi = {10.3233/FAIA241018},

year = {2024},

date = {2024-10-24},

urldate = {2024-10-24},

booktitle = {ECAI 2024 - 27th European Conference on Artificial Intelligence - Including 13th Conference on Prestigious Applications of Intelligent Systems (PAIS 2024)},

volume = {392},

pages = {4402-4409},

publisher = {IOS Press},

address = {Santiago de Compostela, Spain},

series = {Frontiers in Artificial Intelligence and Applications},

abstract = {Learning-based techniques have become popular in both model predictive control (MPC) and reinforcement learning (RL). Probabilistic ensemble (PE) models offer a promising approach for modelling system dynamics, showcasing the ability to capture uncertainty and scalability in high-dimensional control scenarios. However, PE models are susceptible to mode collapse, resulting in non-robust control when faced with environments slightly different from the training set. In this paper, we introduce the uncertainty-driven robust control (UDUC) loss as an alternative objective for training PE models, drawing inspiration from contrastive learning. We analyze the robustness of the UDUC loss through the lens of robust optimization and evaluate its performance on the challenging real-world reinforcement learning (RWRL) benchmark, which involves significant environmental mismatches between the training and testing environments.},

keywords = {},

pubstate = {published},

tppubtype = {inproceedings}

}

Schulz, Felix; Hoffman, Jasper; Zhang, Yuan; Boedecker, Joschka

Learning When to Trust the Expert for Guided Exploration in RL Workshop

2024, (ICML 2024 Workshop: Foundations of Reinforcement Learning and Control -- Connections and Perspectives).

@workshop{schulz2024learning,

title = {Learning When to Trust the Expert for Guided Exploration in RL},

author = {Felix Schulz and Jasper Hoffman and Yuan Zhang and Joschka Boedecker },

url = {https://openreview.net/forum?id=QkTANn4mRa},

year = {2024},

date = {2024-08-07},

urldate = {2025-08-07},

abstract = {Reinforcement learning (RL) algorithms often rely on trial and error for exploring environments, leading to local minima and high sample inefficiency during training. In many cases, leveraging prior knowledge can efficiently construct expert policies, e.g. model predictive control (MPC) techniques. However, the expert might not be optimal and thus, when used as a prior, might introduce bias that can harm the control performance. Thus, in this work, we propose a novel RL method based on a simple options framework that only uses the expert to guide the exploration during training. The exploration is controlled by a learned high-level policy that can decide to follow either an expert policy or a learned low-level policy. In that sense, the high-level skip policy learns when to trust the expert for exploration. As we aim at deploying the low-level policy without accessing the expert after training, we increasingly regularize the usage of the expert during training, to reduce the covariate shift problem. Using different environments combined with potentially sub-optimal experts derived from MPC or RL, we find that our method improves over sub-optimal experts and significantly improves the sample efficiency.},

note = {ICML 2024 Workshop: Foundations of Reinforcement Learning and Control -- Connections and Perspectives},

keywords = {},

pubstate = {published},

tppubtype = {workshop}

}

Zhang, Yuan; Yang, Shaohui; Ohtsuka, Toshiyuki; Jones, Colin; Boedecker, Joschka

Latent Linear Quadratic Regulator for Robotic Control Tasks Working paper

2024, (RSS 2024 Workshop on Koopman Operators in Robotics).

@workingpaper{zhang2024latent,

title = {Latent Linear Quadratic Regulator for Robotic Control Tasks},

author = {Yuan Zhang and Shaohui Yang and Toshiyuki Ohtsuka and Colin Jones and Joschka Boedecker},

url = {https://arxiv.org/abs/2407.11107},

year = {2024},

date = {2024-07-01},

urldate = {2024-07-01},

booktitle = {RSS 2024 Workshop on Koopman Operators in Robotics},

abstract = {Model predictive control (MPC) has played a more crucial role in various robotic control tasks, but its high computational requirements are concerning, especially for nonlinear dynamical models. This paper presents a latent linear quadratic regulator (LaLQR) that maps the state space into a latent space, on which the dynamical model is linear and the cost function is quadratic, allowing the efficient application of LQR. We jointly learn this alternative system by imitating the original MPC. Experiments show LaLQR's superior efficiency and generalization compared to other baselines.},

note = {RSS 2024 Workshop on Koopman Operators in Robotics},

keywords = {},

pubstate = {published},

tppubtype = {workingpaper}

}

Zhang, Yuan; Deekshith, Umashankar; Wang, Jianhong; Boedecker, Joschka

LCPPO: An Efficient Multi-agent Reinforcement Learning Algorithm on Complex Railway Network Proceedings Article

In: 34th International Conference on Automated Planning and Scheduling, 2024.

@inproceedings{zhanglcppo,

title = {LCPPO: An Efficient Multi-agent Reinforcement Learning Algorithm on Complex Railway Network},

author = {Yuan Zhang and Umashankar Deekshith and Jianhong Wang and Joschka Boedecker},

url = {https://openreview.net/forum?id=gylH3hNASm},

year = {2024},

date = {2024-05-09},

urldate = {2024-05-09},

booktitle = {34th International Conference on Automated Planning and Scheduling},

keywords = {},

pubstate = {published},

tppubtype = {inproceedings}

}

Wang, Jianhong; Li, Yang; Zhang, Yuan; Pan, Wei; Kaski, Samuel

Open Ad Hoc Teamwork with Cooperative Game Theory Proceedings Article

In: Forty-first International Conference on Machine Learning, 2024.

@inproceedings{wang2024open,

title = {Open Ad Hoc Teamwork with Cooperative Game Theory},

author = {Jianhong Wang and Yang Li and Yuan Zhang and Wei Pan and Samuel Kaski},

url = {https://openreview.net/forum?id=RlibRvH4B4},

year = {2024},

date = {2024-05-09},

urldate = {2024-05-09},

booktitle = {Forty-first International Conference on Machine Learning},

keywords = {},

pubstate = {published},

tppubtype = {inproceedings}

}

Shengchao, Yan; Zhang, Yuan; Zhang, Bohe; Boedecker, Joschka; Burgard, Wolfram

Learning Continuous Control with Geometric Regularity from Robot Intrinsic Symmetry Proceedings Article

In: 2024 IEEE International Conference on Robotics and Automation ICRA, 2024.

@inproceedings{yan2023geometricb,

title = {Learning Continuous Control with Geometric Regularity from Robot Intrinsic Symmetry},

author = {Yan Shengchao and Yuan Zhang and Bohe Zhang and Joschka Boedecker and Wolfram Burgard},

url = {https://arxiv.org/abs/2306.16316},

year = {2024},

date = {2024-05-09},

urldate = {2024-05-09},

booktitle = {2024 IEEE International Conference on Robotics and Automation ICRA},

keywords = {},

pubstate = {published},

tppubtype = {inproceedings}

}

Zhang, Yuan; Wang, Jianhong; Boedecker, Joschka

Robust Reinforcement Learning in Continuous Control Tasks with Uncertainty Set Regularization Proceedings Article

In: 7th Annual Conference on Robot Learning, 2023.

@inproceedings{zhang2023robust,

title = {Robust Reinforcement Learning in Continuous Control Tasks with Uncertainty Set Regularization},

author = {Yuan Zhang and Jianhong Wang and Joschka Boedecker},

url = {https://openreview.net/forum?id=keAPCON4jHC},

year = {2023},

date = {2023-10-16},

urldate = {2023-10-16},

booktitle = {7th Annual Conference on Robot Learning},

abstract = {Reinforcement learning (RL) is recognized as lacking generalization and robustness under environmental perturbations, which excessively restricts its application for real-world robotics. Prior work claimed that adding regularization to the value function is equivalent to learning a robust policy under uncertain transitions. Although the regularization-robustness transformation is appealing for its simplicity and efficiency, it is still lacking in continuous control tasks. In this paper, we propose a new regularizer named Uncertainty Set Regularizer (USR), to formulate the uncertainty set on the parametric space of a transition function. To deal with unknown uncertainty sets, we further propose a novel adversarial approach to generate them based on the value function. We evaluate USR on the Real-world Reinforcement Learning (RWRL) benchmark and the Unitree A1 Robot, demonstrating improvements in the robust performance of perturbed testing environments and sim-to-real scenarios.},

keywords = {},

pubstate = {published},

tppubtype = {inproceedings}

}

Yan, Schengchao; Zhang, Yuan; Zhang, Baohe; Boedecker, Joschka; Burgard, Wolfram

Geometric Regularity with Robot Intrinsic Symmetry in Reinforcement Learning Workshop

RSS 2023 Workshop on Symmetries in Robot Learning, 2023.

@workshop{yan2023geometric,

title = {Geometric Regularity with Robot Intrinsic Symmetry in Reinforcement Learning},

author = {Schengchao Yan and Yuan Zhang and Baohe Zhang and Joschka Boedecker and Wolfram Burgard},

url = {https://doi.org/10.48550/arXiv.2306.16316},

year = {2023},

date = {2023-06-28},

urldate = {2023-06-28},

booktitle = {RSS 2023 Workshop on Symmetries in Robot Learning},

abstract = {Geometric regularity, which leverages data symmetry, has been successfully incorporated into deep learning architectures such as CNNs, RNNs, GNNs, and Transformers. While this concept has been widely applied in robotics to address the curse of dimensionality when learning from high-dimensional data, the inherent reflectional and rotational symmetry of robot structures has not been adequately explored. Drawing inspiration from cooperative multi-agent reinforcement learning, we introduce novel network structures for deep learning algorithms that explicitly capture this geometric regularity. Moreover, we investigate the relationship between the geometric prior and the concept of Parameter Sharing in multi-agent reinforcement learning. Through experiments conducted on various challenging continuous control tasks, we demonstrate the significant potential of the proposed geometric regularity in enhancing robot learning capabilities.},

keywords = {},

pubstate = {published},

tppubtype = {workshop}

}

Zhang, Yuan; Boedecker, Joschka; Li, Chuxuan; Zhou, Guyue

Incorporating Recurrent Reinforcement Learning into Model Predictive Control for Adaptive Control in Autonomous Driving Working paper

2023.

@workingpaper{zhang2023incorporating,

title = {Incorporating Recurrent Reinforcement Learning into Model Predictive Control for Adaptive Control in Autonomous Driving},

author = {Yuan Zhang and Joschka Boedecker and Chuxuan Li and Guyue Zhou},

doi = {https://doi.org/10.48550/arXiv.2301.13313},

year = {2023},

date = {2023-04-27},

urldate = {2023-04-27},

abstract = {Model Predictive Control (MPC) is attracting tremendous attention in the autonomous driving task as a powerful control technique. The success of an MPC controller strongly depends on an accurate internal dynamics model. However, the static parameters, usually learned by system identification, often fail to adapt to both internal and external perturbations in real-world scenarios. In this paper, we firstly (1) reformulate the problem as a Partially Observed Markov Decision Process (POMDP) that absorbs the uncertainties into observations and maintains Markov property into hidden states; and (2) learn a recurrent policy continually adapting the parameters of the dynamics model via Recurrent Reinforcement Learning (RRL) for optimal and adaptive control; and (3) finally evaluate the proposed algorithm (referred as MPC-RRL) in CARLA simulator and leading to robust behaviours under a wide range of perturbations.},

keywords = {},

pubstate = {published},

tppubtype = {workingpaper}

}

Wang, Jianhong; Wang, Jinxin; Zhang, Yuan; Gu, Yunjie; Kim, Tae-Kyun

SHAQ: Incorporating Shapley Value Theory into Multi-Agent Q-Learning Proceedings Article

In: Advances in Neural Information Processing Systems, 2022, (Accepted at NeurIPS 2022 Conference).

@inproceedings{wang2021shaq,

title = {SHAQ: Incorporating Shapley Value Theory into Multi-Agent Q-Learning},

author = {Jianhong Wang and Jinxin Wang and Yuan Zhang and Yunjie Gu and Tae-Kyun Kim},

url = {https://openreview.net/forum?id=BjGawodFnOy

https://arxiv.org/abs/2105.15013},

year = {2022},

date = {2022-07-04},

urldate = {2021-01-01},

booktitle = {Advances in Neural Information Processing Systems},

journal = {arXiv preprint arXiv:2105.15013},

note = {Accepted at NeurIPS 2022 Conference},

keywords = {},

pubstate = {published},

tppubtype = {inproceedings}

}