Jasper Hoffmann

PhD Candidate

University of Freiburg

Jasper Hoffmann obtained his bachelor’s degree in mathematics in 2016 and his master’s degree in computer science in 2020, both at the University of Freiburg. His master’s thesis studied the interference effects of function approximation in temporal-difference methods for reinforcement learning. He then worked as a research assistant in the Computer Vision group in Freiburg on neural network compression and out-of-distribution robustness. In June 2021, he started his PhD at the Neurorobotics Lab in Freiburg. He is especially interested in combining Model Predictive Control and Reinforcement Learning.

Project description

Classical planning methods can effectively control a single car by leveraging models of the car’s physics, but they struggle with the uncertainty and interaction complexity of multi-agent human driving that fully autonomous driving must handle. This PhD project therefore extends optimal control methods with deep reinforcement learning to tackle these challenges. Important steps in the project are finding the right algorithmic framework and training method, as well as successfully transferring the approach from simulation to the real system. Possible research directions could include offline reinforcement learning, planning in learned latent-space models, and differentiable optimization layers.

Zhang, Yuan; Hoffmann, Jasper; Boedecker, Joschka

UDUC: An Uncertainty-driven Approach for Learning-based Robust Control (Proceedings Article)

In: ECAI 2024 - 27th European Conference on Artificial Intelligence - Including 13th Conference on Prestigious Applications of Intelligent Systems (PAIS 2024), pp. 4402-4409, IOS Press, Santiago de Compostela, Spain, 2024.

@inproceedings{zhang2024uduc,

title = {UDUC: An Uncertainty-driven Approach for Learning-based Robust Control},

author = {Yuan Zhang and Jasper Hoffmann and Joschka Boedecker},

url = {https://arxiv.org/abs/2405.02598},

doi = {10.3233/FAIA241018},

year = {2024},

date = {2024-10-24},

urldate = {2024-10-24},

booktitle = {ECAI 2024 - 27th European Conference on Artificial Intelligence - Including 13th Conference on Prestigious Applications of Intelligent Systems (PAIS 2024)},

volume = {392},

pages = {4402-4409},

publisher = {IOS Press},

address = {Santiago de Compostela, Spain},

series = {Frontiers in Artificial Intelligence and Applications},

abstract = {Learning-based techniques have become popular in both model predictive control (MPC) and reinforcement learning (RL). Probabilistic ensemble (PE) models offer a promising approach for modelling system dynamics, showcasing the ability to capture uncertainty and scalability in high-dimensional control scenarios. However, PE models are susceptible to mode collapse, resulting in non-robust control when faced with environments slightly different from the training set. In this paper, we introduce the uncertainty-driven robust control (UDUC) loss as an alternative objective for training PE models, drawing inspiration from contrastive learning. We analyze the robustness of the UDUC loss through the lens of robust optimization and evaluate its performance on the challenging real-world reinforcement learning (RWRL) benchmark, which involves significant environmental mismatches between the training and testing environments.},

keywords = {},

pubstate = {published},

tppubtype = {inproceedings}

}

Schulz, Felix; Hoffmann, Jasper; Zhang, Yuan; Boedecker, Joschka

Learning When to Trust the Expert for Guided Exploration in RL (Workshop)

2024, (ICML 2024 Workshop: Foundations of Reinforcement Learning and Control -- Connections and Perspectives).

@workshop{schulz2024learning,

title = {Learning When to Trust the Expert for Guided Exploration in RL},

author = {Felix Schulz and Jasper Hoffmann and Yuan Zhang and Joschka Boedecker},

url = {https://openreview.net/forum?id=QkTANn4mRa},

year = {2024},

date = {2024-08-07},

urldate = {2025-08-07},

abstract = {Reinforcement learning (RL) algorithms often rely on trial and error for exploring environments, leading to local minima and high sample inefficiency during training. In many cases, leveraging prior knowledge can efficiently construct expert policies, e.g. model predictive control (MPC) techniques. However, the expert might not be optimal and thus, when used as a prior, might introduce bias that can harm the control performance. Thus, in this work, we propose a novel RL method based on a simple options framework that only uses the expert to guide the exploration during training. The exploration is controlled by a learned high-level policy that can decide to follow either an expert policy or a learned low-level policy. In that sense, the high-level skip policy learns when to trust the expert for exploration. As we aim at deploying the low-level policy without accessing the expert after training, we increasingly regularize the usage of the expert during training, to reduce the covariate shift problem. Using different environments combined with potentially sub-optimal experts derived from MPC or RL, we find that our method improves over sub-optimal experts and significantly improves the sample efficiency.},

note = {ICML 2024 Workshop: Foundations of Reinforcement Learning and Control -- Connections and Perspectives},

keywords = {},

pubstate = {published},

tppubtype = {workshop}

}
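The switching mechanism described in this abstract can be illustrated with a minimal Python sketch. It is not the authors’ implementation. The fixed probability `p_expert` stands in for the learned high-level policy. The linear schedule in `expert_usage_coef` and the penalty in `high_level_reward` are hypothetical choices that show one way expert usage could be increasingly regularized during training.

```python
import random
from typing import Callable, Tuple

Policy = Callable[[float], float]  # maps a (scalar, for simplicity) state to an action


def guided_action(state: float, expert: Policy, low_level: Policy,
                  p_expert: float, rng: random.Random) -> Tuple[float, bool]:
    """Follow either the expert or the learned low-level policy for one step.

    p_expert is a placeholder (assumption) for the output of the learned
    high-level policy; here it is simply a fixed probability.
    """
    use_expert = rng.random() < p_expert
    action = expert(state) if use_expert else low_level(state)
    return action, use_expert


def expert_usage_coef(step: int, total_steps: int, max_coef: float = 1.0) -> float:
    """Hypothetical linear schedule: the penalty on expert usage grows over training."""
    return max_coef * min(step / total_steps, 1.0)


def high_level_reward(env_reward: float, used_expert: bool, coef: float) -> float:
    """Environment reward minus a penalty whenever the expert was followed (assumption)."""
    return env_reward - coef * float(used_expert)
```

With `p_expert = 1.0` the sketch always follows the expert, and with `p_expert = 0.0` it always follows the learned policy. As the abstract notes, only the low-level policy would be deployed after training.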

Hoffmann, Jasper; Clausen, Diego Fernandez; Brosseit, Julien; Bernhard, Julian; Esterle, Klemens; Werling, Moritz; Karg, Michael; Boedecker, Joschka

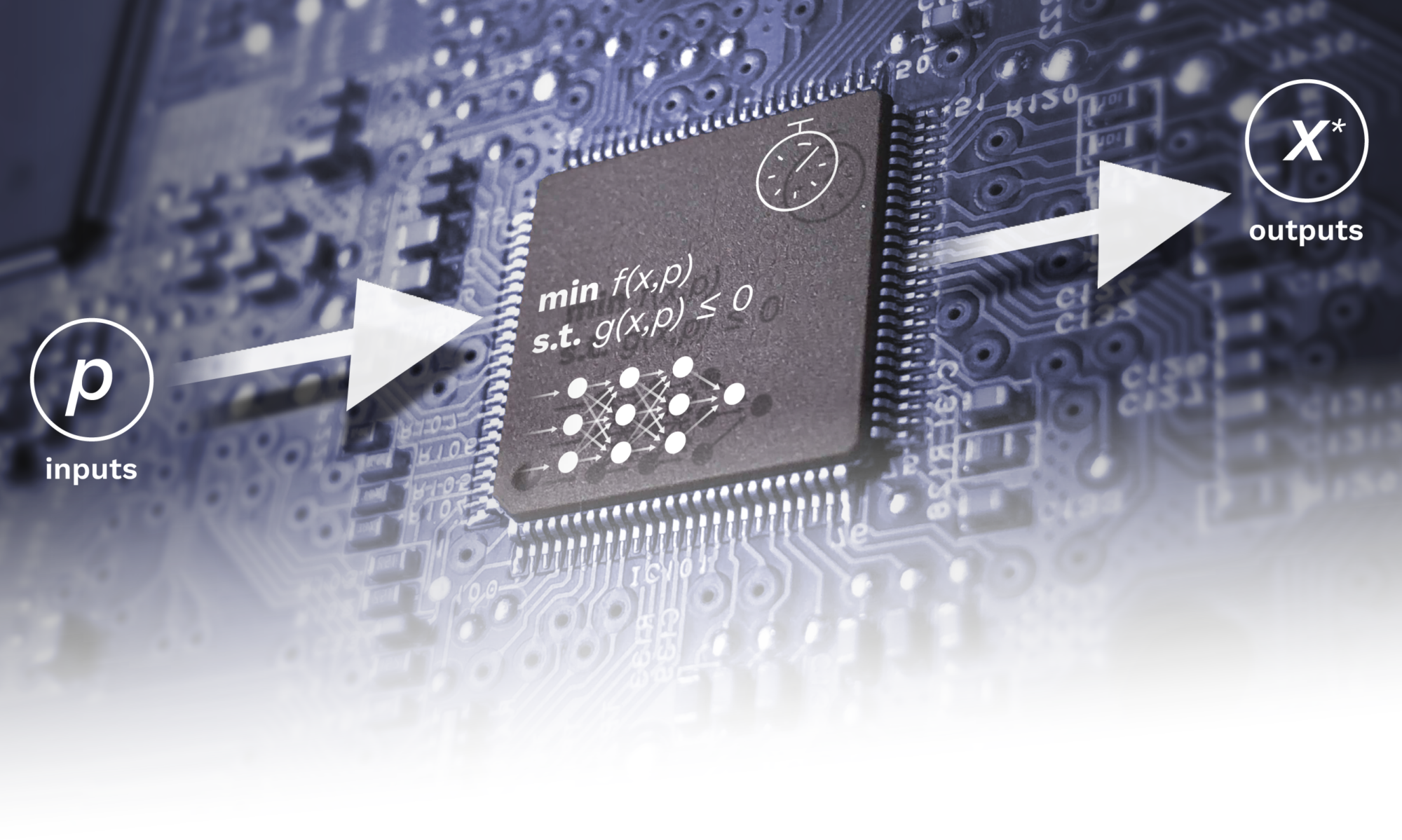

PlanNetX: Learning an efficient neural network planner from MPC for longitudinal control (Proceedings Article)

In: Proceedings of the 6th Annual Learning for Dynamics & Control Conference, pp. 1214-1227, PMLR, 2024.

@inproceedings{hoffmann_plannetx_2024,

title = {PlanNetX: Learning an efficient neural network planner from MPC for longitudinal control},

author = {Jasper Hoffmann and Diego Fernandez Clausen and Julien Brosseit and Julian Bernhard and Klemens Esterle and Moritz Werling and Michael Karg and Joschka Boedecker},

url = {https://proceedings.mlr.press/v242/hoffmann24a.html},

pdf = {https://proceedings.mlr.press/v242/hoffmann24a/hoffmann24a.pdf},

year = {2024},

date = {2024-07-15},

urldate = {2024-07-15},

booktitle = {Proceedings of the 6th Annual Learning for Dynamics \& Control Conference},

pages = {1214-1227},

publisher = {PMLR},

series = {Proceedings of Machine Learning Research},

abstract = {Model predictive control (MPC) is a powerful, optimization-based approach for controlling dynamical systems. However, the computational complexity of online optimization can be problematic on embedded devices. Especially, when we need to guarantee fixed control frequencies. Thus, previous work proposed to reduce the computational burden using imitation learning (IL) approximating the MPC policy by a neural network. In this work, we instead learn the whole planned trajectory of the MPC. We introduce a combination of a novel neural network architecture PlanNetX and a simple loss function based on the state trajectory that leverages the parameterized optimal control structure of the MPC. We validate our approach in the context of autonomous driving by learning a longitudinal planner and benchmarking it extensively in the CommonRoad simulator using synthetic scenarios and scenarios derived from real data. Our experimental results show that we can learn the open-loop MPC trajectory with high accuracy while improving the closed-loop performance of the learned control policy over other baselines like behavior cloning.},

keywords = {},

pubstate = {published},

tppubtype = {inproceedings}

}
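The abstract’s idea of training on the planned state trajectory, rather than on the controls alone, can be sketched in plain Python. This is a hypothetical illustration, not the PlanNetX loss. It assumes that the network outputs a sequence of longitudinal accelerations. Those accelerations are rolled out through an assumed point-mass (double-integrator) model, and the resulting positions and velocities are compared with the MPC’s planned states using a weighted mean squared error. A real training setup would express the same computation in an automatic-differentiation framework.

```python
from typing import List, Sequence, Tuple

State = Tuple[float, float]  # (position s [m], velocity v [m/s])


def rollout(x0: State, accels: Sequence[float], dt: float) -> List[State]:
    """Integrate an assumed double-integrator model under the predicted accelerations."""
    s, v = x0
    traj = []
    for a in accels:
        s = s + v * dt + 0.5 * a * dt ** 2
        v = v + a * dt
        traj.append((s, v))
    return traj


def state_trajectory_loss(x0: State, pred_accels: Sequence[float],
                          mpc_states: Sequence[State], dt: float,
                          w_s: float = 1.0, w_v: float = 1.0) -> float:
    """Weighted MSE between the rolled-out states and the MPC's planned states."""
    pred = rollout(x0, pred_accels, dt)
    if len(pred) != len(mpc_states):
        raise ValueError("predicted and MPC trajectories must have the same length")
    err = 0.0
    for (s_p, v_p), (s_m, v_m) in zip(pred, mpc_states):
        err += w_s * (s_p - s_m) ** 2 + w_v * (v_p - v_m) ** 2
    return err / len(pred)
```

Because the loss is computed on states, an early acceleration error is penalized at every later step it affects. A loss on the accelerations alone would count that error only once.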

Reiter, Rudolf; Ghezzi, Andrea; Baumgärtner, Katrin; Hoffmann, Jasper; McAllister, Robert D; Diehl, Moritz

AC4MPC: Actor-Critic Reinforcement Learning for Nonlinear Model Predictive Control (Working paper)

2024.

@workingpaper{reiter2024ac4mpc,

title = {AC4MPC: Actor-Critic Reinforcement Learning for Nonlinear Model Predictive Control},

author = {Rudolf Reiter and Andrea Ghezzi and Katrin Baumgärtner and Jasper Hoffmann and Robert D McAllister and Moritz Diehl},

url = {https://doi.org/10.48550/arXiv.2406.03995},

year = {2024},

date = {2024-06-06},

abstract = {Model predictive control (MPC) and reinforcement learning (RL) are two powerful control strategies with, arguably, complementary advantages. In this work, we show how actor-critic RL techniques can be leveraged to improve the performance of MPC. The RL critic is used as an approximation of the optimal value function, and an actor roll-out provides an initial guess for primal variables of the MPC. A parallel control architecture is proposed where each MPC instance is solved twice for different initial guesses. Besides the actor roll-out initialization, a shifted initialization from the previous solution is used. Thereafter, the actor and the critic are again used to approximately evaluate the infinite horizon cost of these trajectories. The control actions from the lowest-cost trajectory are applied to the system at each time step. We establish that the proposed algorithm is guaranteed to outperform the original RL policy plus an error term that depends on the accuracy of the critic and decays with the horizon length of the MPC formulation. Moreover, we do not require globally optimal solutions for these guarantees to hold. The approach is demonstrated on an illustrative toy example and an autonomous driving (AD) overtaking scenario.},

keywords = {},

pubstate = {published},

tppubtype = {workingpaper}

}
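The selection step of the parallel architecture can be sketched in plain Python for a scalar system. All function names are hypothetical, and the sketch rests on three assumptions. First, the infinite-horizon cost of a candidate is approximated as the sum of its stage costs plus the critic’s value at the terminal state; this version uses only the critic for the tail. Second, the shifted guess is padded with a caller-supplied terminal action, for example the actor’s action. Third, the nonlinear MPC solve that would refine each initial guess is omitted, so the candidates passed in stand for the solver’s outputs.

```python
from typing import Callable, List, Sequence

Dynamics = Callable[[float, float], float]   # x_next = f(x, u)
StageCost = Callable[[float, float], float]  # l(x, u)
Critic = Callable[[float], float]            # approximate value V(x)
Actor = Callable[[float], float]             # u = pi(x)


def actor_rollout_guess(x0: float, actor: Actor, f: Dynamics, horizon: int) -> List[float]:
    """Initial guess 1: simulate the actor over the MPC horizon."""
    x, us = x0, []
    for _ in range(horizon):
        u = actor(x)
        us.append(u)
        x = f(x, u)
    return us


def shifted_guess(prev_us: Sequence[float], terminal_action: float) -> List[float]:
    """Initial guess 2: previous solution shifted by one step and padded at the end."""
    return list(prev_us[1:]) + [terminal_action]


def approx_total_cost(x0: float, us: Sequence[float], f: Dynamics,
                      stage_cost: StageCost, critic: Critic) -> float:
    """Stage costs over the horizon plus the critic as a terminal (tail) cost."""
    x, cost = x0, 0.0
    for u in us:
        cost += stage_cost(x, u)
        x = f(x, u)
    return cost + critic(x)


def select_first_action(x0: float, candidates: Sequence[Sequence[float]], f: Dynamics,
                        stage_cost: StageCost, critic: Critic) -> float:
    """Apply the first control of the lowest-cost candidate trajectory."""
    best = min(candidates, key=lambda us: approx_total_cost(x0, us, f, stage_cost, critic))
    return best[0]
```

For example, with `f(x, u) = x + u`, stage cost `x² + u²`, critic `x²`, and `x0 = 1`, the candidate `[-1, 0]` costs 2 and `[0, 0]` costs 3. The sketch therefore applies `-1`.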

Reiter, Rudolf; Hoffmann, Jasper; Boedecker, Joschka; Diehl, Moritz

A Hierarchical Approach for Strategic Motion Planning in Autonomous Racing (Proceedings Article)

In: 2023 European Control Conference (ECC), pp. 1-8, IEEE, Bucharest, Romania, 2023, ISBN: 978-3-907144-08-4.

@inproceedings{Reiter2023Hierarchical,

title = {A Hierarchical Approach for Strategic Motion Planning in Autonomous Racing},

author = {Rudolf Reiter and Jasper Hoffmann and Joschka Boedecker and Moritz Diehl},

doi = {10.23919/ECC57647.2023.10178143},

isbn = {978-3-907144-08-4},

year = {2023},

date = {2023-07-17},

urldate = {2023-07-17},

booktitle = {2023 European Control Conference (ECC)},

pages = {1-8},

publisher = {IEEE},

address = {Bucharest, Romania},

abstract = {We present an approach for safe trajectory planning, where a strategic task related to autonomous racing is learned sample efficiently within a simulation environment. A high-level policy, represented as a neural network, outputs a reward specification that is used within the cost function of a parametric nonlinear model predictive controller. By including constraints and vehicle kinematics in the nonlinear program, we can guarantee safe and feasible trajectories related to the used model. Compared to classical reinforcement learning, our approach restricts the exploration to safe trajectories, starts with an excellent prior performance and yields complete trajectories that can be passed to a tracking lowest-level controller. We do not address the lowest-level controller in this work and assume perfect tracking of feasible trajectories. We show the superior performance of our algorithm on simulated racing tasks that include high-level decision-making. The vehicle learns to efficiently overtake slower vehicles and avoids getting overtaken by blocking faster ones.},

keywords = {},

pubstate = {published},

tppubtype = {inproceedings}

}

Ghezzi, Andrea; Hoffmann, Jasper; Frey, Jonathan; Boedecker, Joschka; Diehl, Moritz

Imitation Learning from Nonlinear MPC via the Exact Q-Loss and its Gauss-Newton Approximation (Proceedings Article)

In: 2023 Conference on Decision and Control (CDC), pp. 4766-4771, IEEE, Singapore, Singapore, 2023, ISBN: 979-8-3503-0124-3.

@inproceedings{Ghezzi2023b,

title = {Imitation Learning from Nonlinear MPC via the Exact Q-Loss and its Gauss-Newton Approximation},

author = {Andrea Ghezzi and Jasper Hoffmann and Jonathan Frey and Joschka Boedecker and Moritz Diehl},

url = {https://doi.org/10.48550/arXiv.2304.01782},

doi = {10.1109/CDC49753.2023.10383323},

isbn = {979-8-3503-0124-3},

year = {2023},

date = {2023-01-19},

urldate = {2023-01-19},

booktitle = {2023 Conference on Decision and Control (CDC)},

pages = {4766-4771},

publisher = {IEEE},

address = {Singapore, Singapore},

abstract = {This work presents a novel loss function for learning nonlinear Model Predictive Control policies via Imitation Learning. Standard approaches to Imitation Learning neglect information about the expert and generally adopt a loss function based on the distance between expert and learned controls. In this work, we present a loss based on the Q-function directly embedding the performance objectives and constraint satisfaction of the associated Optimal Control Problem (OCP). However, training a Neural Network with the Q-loss requires solving the associated OCP for each new sample. To alleviate the computational burden, we derive a second Q-loss based on the Gauss-Newton approximation of the OCP resulting in a faster training time. We validate our losses against Behavioral Cloning, the standard approach to Imitation Learning, on the control of a nonlinear system with constraints. The final results show that the Q-function-based losses significantly reduce the amount of constraint violations while achieving comparable or better closed-loop costs.},

keywords = {},

pubstate = {published},

tppubtype = {inproceedings}

}
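To see how a Q-function-based loss relates to Behavioral Cloning, consider a toy problem: an unconstrained, scalar, linear-quadratic one. This is an assumption far simpler than the constrained nonlinear setting of the paper, and the function names are hypothetical. In this toy the Q-function has a closed form and is exactly quadratic in the control, so a Gauss-Newton approximation would be exact. The Q-loss Q(x, u) − V(x) then equals the Behavioral Cloning error (u − u*)² scaled by the curvature r + b²p. The two losses thus differ only by a constant weight here, so any practical difference must come from features this sketch omits, such as constraints, nonlinear dynamics, or multi-dimensional controls.

```python
def solve_scalar_dare(a: float, b: float, q: float, r: float, iters: int = 500) -> float:
    """Iterate the scalar discrete-time Riccati recursion; returns p with V(x) = p * x**2."""
    p = q
    for _ in range(iters):
        p = q + a * a * p - (a * b * p) ** 2 / (r + b * b * p)
    return p


def q_value(x: float, u: float, a: float, b: float, q: float, r: float, p: float) -> float:
    """Q(x, u) = stage cost q x^2 + r u^2 plus optimal cost-to-go of x_next = a x + b u."""
    x_next = a * x + b * u
    return q * x * x + r * u * u + p * x_next * x_next


def q_loss(x: float, u: float, a: float, b: float, q: float, r: float, p: float) -> float:
    """Q(x, u) - V(x): non-negative and zero only at the optimal control."""
    return q_value(x, u, a, b, q, r, p) - p * x * x


def optimal_control(x: float, a: float, b: float, r: float, p: float) -> float:
    """Minimizer of Q(x, .), i.e. the expert (LQR) action."""
    return -(a * b * p) / (r + b * b * p) * x


def bc_loss(u: float, u_expert: float) -> float:
    """Behavioral Cloning: squared distance to the expert control."""
    return (u - u_expert) ** 2
```

For example, with a = b = q = r = 1 the Riccati solution is the golden ratio p ≈ 1.618. A control error of 0.3 then costs about 2.618 × 0.09 ≈ 0.236 under the Q-loss, versus 0.09 under Behavioral Cloning.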